DrivePool BETA M4 has been out for a while now and is nearing a final release.

You can grab the public beta right here:

http://wiki.covecube.com/StableBit_DrivePool

In this post let’s delve into how file duplication works in DrivePool M4.

Real-time file duplication

I’ve received a number of questions regarding this topic, so let me start by laying out what file duplication accomplishes.

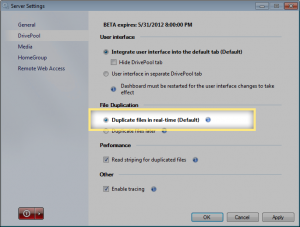

DrivePool M4 actually can maintain duplicate copies of files in 2 different ways, real-time and by duplicating later. Let’s talk about the real-time duplication option first, because that will be what the majority of users will be using and it’s also the default mode out of the box.

What does it do?

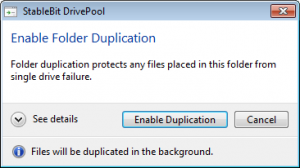

File duplication protects files placed in a duplicated folder from any single drive failure at a time. That means that if one drive fails, all the files in all the duplicated folders will remain intact.

Does file duplication constantly chew up resources in the background duplicating files?

Under normal circumstances, real-time file duplication does not require any background maintenance in order to keep your files duplicated.

Files are simply updated in parallel across multiple disks, every time something writes to them or modifies them in any way.

What about changing the level of duplication of an existing folder?

You can do that from the Dashboard for any folder on the pool.

There is a background duplication process that will kick off and creates new duplicated file parts for each file in that folder or cleans up existing duplicated file parts if you choose to disable folder duplication.

This process runs completely in the background and is a one time pass. When the process completes, all the duplicates files are in the correct state for the real-time duplication engine to take over.

You might be wondering what would happen if you changed the duplication level of another folder while the background duplication process is already running. Don’t worry, it will handle that properly and duplicate or de-duplicate all your files as needed.

How do background duplication changes affect disk performance?

All background duplication changes are processed using a Windows feature called Background I/O. This feature was introduced with Windows Vista and allows applications to perform intensive disk tasks, such as copying large files around, without impacting the overall disk performance. Windows accomplishes this by prioritizing the actual I/O operations in the kernel, so this is pretty effective. But of course in the real world you may notice a slight performance hit when background duplication is running.

After you change the duplication state of a folder, the time to complete a duplication pass depends on how busy your server is. This means that it might take a while for the background duplication pass to complete if your server is busy doing something else.

Future versions will optimize this even more.

Will background duplication lock files as it makes duplicate copies of them?

No it won’t.

This is actually pretty cool and is available as of build 5805. DrivePool’s background duplication engine does not lock any files while it’s working on them. What it does is, monitor the file for any other applications that might want to alter it and if it detects such an occurrence it will cleanly back out of whatever it was doing to that file and yield to the other application.

The other application doesn’t see anything out of the ordinary at all when it tries to, for example, write to a file that is currently being duplicated.

DrivePool accomplishes this using standard NTFS APIs.

Duplication consistency

Now that we’ve covered the basics, let’s delve a bit deeper into the real-time duplication system and talk about how DrivePool ensures that duplication consistency is maintained at all times.

Duplication consistency means a number of things. For files (or alternate streams), it means that there should be the correct number of file parts for each file in any given directory. For example, in a duplicated directory there should be exactly 2 file parts for each file in that directory, no more and no less. In a non-duplicated directory there should be exactly one file part for each file.

A file part is simply a file residing on one of the pooled disks that the file on the pool represents.

For directories DrivePool allows more than the required number of directory parts. For example, for a duplicated directory, there could be 4 directory parts representing it and that would still be consistent. However, one directory part representing a duplicated directory is not consistent, there must be at least 2.

There is no upper limit on how many directory parts can exists. This is because DrivePool can clone any directory at any time, depending on its internal file placement strategy. As an optimization, DrivePool does not clone the entire directory structure across all disks but opts to manage and clone directories in real-time as needed. But there can never be less directory parts than required.

Ensuring consistency during real-time usage

Every time you open any file, directory or alternate stream, CoveFS checks to make sure that the correct number of file parts were found for it, given the above rules. This is done in real-time and doesn’t cost anything extra, in terms of performance, because we need to find all the file parts anyway.

If an incorrect number of file parts were found, CoveFS records the file parts that were found, and how many were expected to be found. It sends this information up to the DrivePool service, which logs the issue in its human readable log and marks the pool as “needing duplication”.

At the next available opportunity, DrivePool will start a scan of the entire pool in order to make sure that each file is consistent and will correct the problem automatically, if possible. This is called the background duplication pass, and this is what causes the “Duplicating…” message to appear.

Background duplication

Background duplication is a process that scans all the files on the pool for duplication problems. It can be triggered by changing the duplication level on an existing folder, reconnecting a missing disk to the pool, adding a disk from a different pool, and a number of other administrative tasks.

During normal shared folder usage, with real-time duplication enabled, background duplication does not need to run in order to maintain file duplication.

Not interfering with other I/O

One of the goals of background duplication is not to interfere with other disk I/O, pooled I/O or otherwise. DrivePool accomplishes this in 2 ways.

First, whenever DrivePool works on a file, for example, to restore a missing duplicated file part, it will never lock that file. This allows any other application to open the same file that DrivePool is working on and start modifying it. DrivePool qukcikly and cleanly aborts what it was doing to that particular file and moves on to the next one.

Second, the entire background duplication pass is conducted on a thread that is scheduled to use Background I/O, a Windows feature that schedules all I/O requests to yield to any other normal priority I/O request. This is far more effective than just lowering the CPU priority of a thread. The down side of doing this is that the duplication pass can take longer than it would otherwise, but with the first feature mentioned above, this is not much of a problem. Background I/O priority can be turned off using DrivePool’s advanced settings.

Checking directories and files

The background duplication check is more through than the real-time check. It goes through each file and directory and makes sure that they have the correct number of file parts and that each file part is the same.

We’ve already talked about the criteria that the real-time duplication check uses to determine how many file parts should exist for any given entity on the pool. The background duplication check uses the same rules.

After it verifies that it has the correct number of file parts, background duplication will check each file part to ensure that it’s the same. It does this by first looking at the size of the file, if the size differs then we have a duplication conflict, and we don’t go any further.

We then check the modification time on each file part, if the modification times are different then DrivePool starts to check the contents of all the file parts to make sure that they all contain the same data. It does this by performing a hash of each file part and comparing the result. If all the file parts contain the same exact data, then DrivePool synchronizes the modification times across all the file parts for the given file. If a mismatch is found in the file contents, then that triggers a duplication conflict.

Note that, at this point we’ve determined whether a file has the correct number of file parts or not, but we haven’t reacted to that information yet. We’ve simply established that all the file parts are the same.

Once we are sure that every file part is the same, we now do one of two things. We either clone existing file parts into more file parts, as needed, or we cleanup existing file parts if there are too many of them. Of course we never remove the last file part.

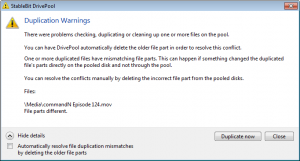

Duplication conflicts

When a duplication conflict is detected by the background duplication pass, the file name is recorded and the file is skipped. We don’t perform any automatic maintenance on files which have file parts that don’t match in terms of their data contents.

Just to clarify, this means that we never delete or duplicate file parts that have different data contents.

A warning is issued to the user in this case at the end of the background duplication run.

This condition is rare, because it should only be caused by some application or user directly accessing and modifying the pooled files on the individual pooled disks, as opposed to going through the pool.

Resolving duplication conflicts

To resolve such a conflict, you can either tell DrivePool to delete the older file parts automatically, or you can find the file parts yourself and delete the ones that are not correct.

File modification times

If you’re going to modify file parts directly, or will be running applications that modify file times, keep in mind that DrivePool uses the file modification times of the file parts contained in the pool part folders to ensure duplication consistency. If you modify these times directly (not through the pool drive), it won’t break duplication, but the next time that background duplication needs to run, it will need to re-hash every duplicated file in order to make sure that the contents haven’t changed. This can take a while.

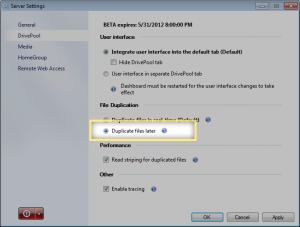

Duplicating files later

Now that we’ve talked about real-time file duplication and how it works together with background duplication, let’s talk about what it means to duplicate files later.

Duplicating files later does 3 things.

First, new files are never written to 2 disks at the same time. All new files are always written to a single disk.

Second, duplicating files later turns off the real-time duplication check. In other words, if a file is found to have an incorrect number of file parts, nothing out of the ordinary happens.

Third, a background duplication pass is scheduled to run every night in order to duplicate files that need duplicating. This background duplication pass is identical to the one that runs in the real-time duplication scenario.

Why duplicate file later?

When duplicating files in real-time, every write is issued to two file parts, in parallel. If you want to avoid the second write for performance reasons, this is another option available to you.

This might be a good option if you tend to write a lot of temporary files to duplicated folders that then get quickly deleted and so there is no real need to duplicate them.

Overall, most people should stick to the real-time duplication option as it offers instant file protection as soon as any file is copied into a duplicated folder.

Real world usage

Overall, DrivePool M4’s duplication system is designed to be as maintenance free as possible. Many tasks are automated, including recovery of missing duplicated file parts.

Anything that requires your attention will raise an alert in the Dashboard letting you know of the problem, and offer some advice on how to go about resolving it.

You’re not really required to understand its inner workings in order to make use of folder duplication, but I thought I’d share with you what is going on behind the scenes so that you can have some idea of how the system works.