StableBit DrivePool is now on the road to a stable final release. The latest build doesn’t feature many visual changes; instead, it focuses on refining the file system component of DrivePool.

We’re not done tweaking the UI; it’s just not the focus of this build.

Download the latest build here: http://stablebit.com/DrivePool/Download

CoveFS Changes

CoveFS is our high-performance kernel driver, written specifically to pool multiple file systems together. This build includes many changes to ensure compatibility with third-party applications, in particular enterprise-grade applications such as database engines, virtual machines, and essentially any application that relies on very heavy concurrent I/O on the pool.

For example, here is what we are now able to do with this latest build out of the box:

- StableBit DrivePool can now be checked out, built, and deployed directly from the pool using SVN, Visual Studio 2012, MSBuild and some proprietary deployment scripts.

- All of the Virtual Machines used for testing now run from the pool. This means that they can instantly benefit from the performance gains obtained through read striping, and that they are protected from drive failure by file duplication.

- The virtual machine software used is VMware Workstation 7.1.4 and Oracle’s VirtualBox 4.2.12.

A lot of work went into the file system changes for this build, and I’ll talk about some of them below.

Report as NTFS by Default

After many months of testing the DrivePool 2.0 BETA, it has become apparent that we can no longer report our file system as “CoveFS”. There are simply too many applications out there that check specifically for “NTFS”, and if they don’t find that string, they either refuse to run outright or assume that the file system is FAT based (which is typically limited to 2GB/4GB file sizes).

The latest example of this is VMware Workstation 7. It needs the file system to be “NTFS”; otherwise, it assumes a 2GB file size limit and refuses to run. Windows 8 also has hard-coded strings for “expected” file systems in some of its system code (which we reverse engineered), and if a file system is not on that list, Windows misbehaves in subtle ways.

So in the latest build, CoveFS now reports as “NTFS” by default. You can still revert to reporting as “CoveFS” by editing the service .config file.
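To see why the reported name matters, here is a hypothetical sketch of the kind of check such applications perform. On Windows, the name typically comes from the Win32 `GetVolumeInformationW` call (its file system name buffer). The function name `assumed_max_file_size` and the specific limits are illustrative assumptions, not code taken from VMware or any other product.

```python
from typing import Optional

# Illustrative limits (assumptions for this sketch, not any vendor's values).
FAT32_MAX_FILE = 4 * 1024**3 - 1   # FAT32: one byte short of 4 GiB per file
LEGACY_MAX_FILE = 2 * 1024**3 - 1  # conservative 2 GiB fallback


def assumed_max_file_size(fs_name: str) -> Optional[int]:
    """Hypothetical app logic: guess a per-file size limit from the
    file system name reported by the volume.

    Returns None when the app assumes there is no practical limit.
    """
    name = fs_name.strip().upper()
    if name == "NTFS":
        return None  # large VM disks, databases, etc. are allowed
    if name == "FAT32":
        return FAT32_MAX_FILE
    # Anything unrecognized (e.g. "CoveFS") gets the most conservative guess,
    # which is how a perfectly capable pool ends up being treated like FAT.
    return LEGACY_MAX_FILE


for reported in ("NTFS", "FAT32", "CoveFS"):
    print(reported, assumed_max_file_size(reported))
```

Reporting “NTFS” sidesteps the fallback branch entirely, which is why it is the safer default for compatibility.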

Quirky File System Behavior

Some things in Windows are just wacky, and programs have been built to expect this wackiness; without it, they break. So we have to do our best to reproduce it in CoveFS in order to maintain the best possible compatibility with these programs.

Here’s an example:

Normally in Windows, you can rename a file (or folder) even if it is currently in use. Applications typically use this behavior to rename a file that can’t be deleted because something else is using it; perhaps you’ve even used this trick yourself. Windows Home Server 2011 did this when you asked it to move an in-use folder to a different disk. I’m sure that there are countless other examples.

What’s the wacky part?

You can also tell Windows to open a file that’s in use and rename it to itself, while requesting to explicitly overwrite the destination.

Now how does that make any sense at all? How can you rename a file to itself, much less overwrite the destination (which is itself, and is in use by something else)?

In this case, CoveFS used to tell the caller that the destination was in use and couldn’t be overwritten. NTFS, however, performs the rename without complaint, so now CoveFS does as well.

It’s these kinds of issues that make a huge difference in compatibility. This particular problem prevented VMware from functioning correctly from the pool.
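The rename rule can be captured in a small toy model. This is a hypothetical in-memory volume written for illustration, not CoveFS code; `ToyVolume`, `SharingViolation` and the handle counting are all assumptions. Overwriting a *different* file that is in use fails, while renaming a file onto its own name with overwrite requested succeeds as a no-op, matching the NTFS behavior described above.

```python
from dataclasses import dataclass, field


class SharingViolation(Exception):
    """Stands in for the 'file is in use' error a real volume would return."""


@dataclass
class ToyVolume:
    """Hypothetical in-memory model of rename semantics (not CoveFS code)."""
    files: dict = field(default_factory=dict)         # name -> contents
    open_handles: dict = field(default_factory=dict)  # name -> handle count

    def rename(self, src: str, dst: str, replace_existing: bool = False) -> None:
        if src not in self.files:
            raise FileNotFoundError(src)
        # NTFS-compatible quirk: renaming a file onto itself succeeds as a
        # no-op, even with overwrite requested and other handles still open.
        # (Case-only renames such as "a" -> "A" are out of scope here.)
        if src == dst:
            return
        if dst in self.files:
            if not replace_existing:
                raise FileExistsError(dst)
            if self.open_handles.get(dst, 0) > 0:
                raise SharingViolation(dst)  # can't overwrite an in-use target
        # Renaming an in-use *source* is allowed; its handles follow the file.
        self.files[dst] = self.files.pop(src)
        if src in self.open_handles:
            self.open_handles[dst] = self.open_handles.pop(src)


vol = ToyVolume(files={"disk.vmdk": b"data"}, open_handles={"disk.vmdk": 1})
vol.rename("disk.vmdk", "disk.vmdk", replace_existing=True)  # no error
```

The old CoveFS behavior corresponds to dropping the `src == dst` early return, in which case the in-use check rejects the self-rename.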

Locking / Caching

One of the most important parts of any file system is how it interacts with the memory manager and the cache manager.

Locking and caching affect how EXE files are opened, how data is cached when read from or written to the disk, and how memory mapping works. Memory mapping is typically used by database or backup applications, word processors, or anything that needs to access random areas of very large files quickly.

The latest build drastically simplifies how DrivePool interacts with all of these core systems.

Eliminating complexity means eliminating potential for problems, so I think that this is a very important change.
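As a generic illustration of the memory-mapped access pattern mentioned above (plain Python `mmap`, nothing DrivePool-specific), an application can map a large file and read an arbitrary region by offset, with pages faulted in on demand through the file system, memory manager and cache manager. The fixed-size record layout and the helper names are assumptions made for this sketch.

```python
import mmap
import os
import tempfile

RECORD_SIZE = 16  # hypothetical fixed-size record layout


def make_demo_file(n_records: int) -> str:
    """Write n_records fixed-size records (record i stores the integer i)."""
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        for i in range(n_records):
            tmp.write(i.to_bytes(RECORD_SIZE, "little"))
        return tmp.name


def read_record(path: str, index: int) -> int:
    """Random access via a memory mapping: no explicit seek/read calls.

    Pages are brought into memory on demand as the slice touches them.
    """
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            start = index * RECORD_SIZE
            return int.from_bytes(mm[start:start + RECORD_SIZE], "little")


path = make_demo_file(100_000)
try:
    print(read_record(path, 73_421))  # prints 73421
finally:
    os.remove(path)
```

Because every such access is routed through the file system’s paging path rather than ordinary read calls, a pooled file system has to get its cache and memory manager interaction right for this pattern to work.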

File Performance was Broken

The idea behind File Performance was a small pane that answers the question “what is going on on the pool?” It was supposed to show you the top 5 most active file transfers in real time.

The original File Performance back end was written using ETW (Event Tracing for Windows, the same mechanism that Windows Resource Monitor uses).

Unfortunately it had some problems:

- It was somewhat slow, because the required I/O was difficult to parse in real time.

- Ongoing I/O that started before you opened the performance pane was not shown.

- There was a 2-second delay before we could show you the file I/O.

- Some I/O was missed outright due to the cache manager’s lazy writer / read-ahead functionality.

- What was shown in File Performance was not in sync with what was shown in Pool Performance (I alluded to this when I first introduced this feature).

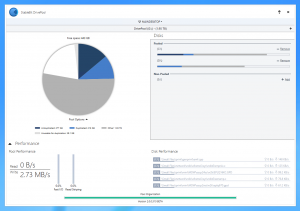

Introducing Disk Performance

So the old code that handled File Performance was scrapped.

In its place is a completely new, high-performance design that works intimately with the CoveFS file system to give you instant file performance data.

And now it’s called Disk Performance.

From the user’s point of view, the UI is similar, except that it’s more accurate and timely.

But here are the functional differences:

- Performance data is sampled every second and is not delayed. You are seeing near real-time activity, not a historic average.

- All I/O that goes through the pool is recorded. Cached, uncached, and Fast I/O are always included. We sample everything.

- Performance sampling is very efficient. There is no need to parse anything. The file system keeps track of all of the statistics and gives us exactly what we request.

- There is virtually no overhead. The file system only keeps samples of file performance if it was queried for the data within the past 60 seconds.
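The behavior in the list above (counters kept by the file system, one-second sampling, and recording only while someone has queried within the last 60 seconds) can be modeled roughly as follows. This is a hypothetical user-mode sketch, not the CoveFS kernel code; the class and method names are illustrative, and keying counters by file path is an assumption.

```python
import time
from collections import defaultdict


class PerfCounters:
    """Hypothetical sketch of per-file I/O counters kept by a file system."""

    IDLE_CUTOFF = 60.0  # stop recording if nobody has queried in 60 seconds

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._last_query = None  # never queried -> not recording
        self._bytes = defaultdict(lambda: [0, 0])  # path -> [read, written]

    def _recording(self) -> bool:
        return (self._last_query is not None
                and self._clock() - self._last_query <= self.IDLE_CUTOFF)

    def on_io(self, path: str, nbytes: int, is_write: bool) -> None:
        """Called on every I/O path (cached, uncached and Fast I/O alike)."""
        if not self._recording():
            return  # cheap early-out: near-zero cost when nobody is watching
        self._bytes[path][1 if is_write else 0] += nbytes

    def sample(self) -> dict:
        """Return and reset the counts accumulated since the last sample.

        No parsing is needed: the caller gets exactly the totals it asked for.
        """
        self._last_query = self._clock()
        snapshot = {p: tuple(v) for p, v in self._bytes.items()}
        self._bytes.clear()
        return snapshot
```

Because each sample covers one second, the per-sample byte counts read directly as rates; a copy throttled to 1.0 MB/s should appear as roughly one megabyte per sample.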

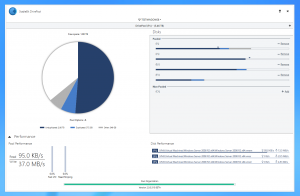

Accuracy

We now have much greater accuracy.

For example, in the screenshot above you can see an ongoing throttled file copy. The file copy was throttled to 1.0 MB/s using SyncBack SE.

You can see that the number reported by DrivePool is very close to 1.0 MB/s and that the Pool Performance statistics are now completely consistent with Disk Performance.

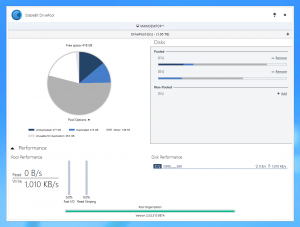

Live Transfers

Because of the increased responsiveness of the new design, DrivePool can now actually show you whether a file transfer listed in the Disk Performance pane has recently ended.

For example, in the screenshot above, all of the disk activity is grayed out. This means that the activity is historic: it had already ended by the time we asked for the sample. By default, DrivePool samples performance data once per second (this can be changed in the service .config file).
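One way to model the live/historic distinction: each sampled entry carries its throughput over the last second plus a flag saying whether the transfer had already ended when the sample was taken. The `ended` flag, the field names, and reusing the top-5 limit from the original File Performance pane are assumptions for this sketch; it is not how DrivePool necessarily decides this.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FileActivity:
    path: str
    bytes_last_second: int
    ended: bool  # hypothetical flag: transfer finished before the sample was taken


def top_transfers(entries: List[FileActivity],
                  limit: int = 5) -> List[Tuple[str, int, str]]:
    """Rank entries by recent throughput and label each one live or historic.

    Historic entries are the ones the UI would show grayed out.
    """
    ranked = sorted(entries, key=lambda e: e.bytes_last_second, reverse=True)
    return [
        (e.path, e.bytes_last_second, "historic" if e.ended else "live")
        for e in ranked[:limit]
    ]
```

Keeping the ended/ongoing state alongside the byte counts lets the UI render a just-finished transfer differently without waiting for it to age out of the list.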

Windows 8.1 / Windows Server 2012 R2

The current build of DrivePool is not compiled for the Windows 8.1 kernel, so it’s not compatible with the Windows 8.1 preview that Microsoft released last week.

I would have liked to build a Windows 8.1 compatible version of DrivePool for this release, but unfortunately the development tools that Microsoft released are not working correctly on the build machine (well, they’re crashing).

To be fair, these are “Preview” tools, and I’m sure that Microsoft will fix these issues before the final release.

So I’d just like to mention that Windows 8.1 support is coming to DrivePool.

Other Issues / Feature Requests

People have also reported a number of other issues and made a number of feature requests.

For example:

- Reparse points are currently not supported on the pool. This means that you can’t create symbolic links on the pool. Stay tuned, this is being worked on.

- The Microsoft NFS Server doesn’t work from the pool on Windows Server 2012. This is also being addressed. Perhaps the 2012 NFS server requires reparse point support; I don’t know at this point. Either way, it will be addressed.

The next build of DrivePool 2.X will concentrate on fixing / refining any remaining issues in the service and the UI.

Areas of interest include:

- Refining the per-folder duplication UI.

- Testing remote control more thoroughly and fixing any remaining issues there.

- Showing better error messages when adding / removing disks fails.

You can download the latest build of StableBit DrivePool here: http://stablebit.com/DrivePool/Download

Until next time.